Hardware Confusion 2019

[Page 9] The GPU & Monitor

Graphics Card: EVGA GeForce GTX 970 SC 4GB

Monitor: 24" Samsung 2443BW LCD

If you're looking for advice as to which GPU is best for gaming right now, unfortunately I won't be much use to you. I don't game on my PC anymore, so I'm out of the GPU arms race; I continue to use the perfectly functional EVGA GTX 970 I purchased in late 2014. It should still have plenty of life left in it for my purposes, but one of the nice aspects of my Intel i7 9700K CPU is that it comes with an Integrated Graphics Processor (IGP), albeit one that's not useful for gaming - see the System Core section earlier in this article. The IGP is perfectly fine for desktop use though, so I've got a backup when my GTX 970 bites the dust.

A key reason I'm more than happy to keep the GTX 970 isn't the money it saves me; it's that the card has one very important feature: a complete lack of noise. If you read what I wrote in my Hardware Confusion 2009 article regarding the GTX 285 GPU I'd purchased at that time, I had to go through two replacement cards before I got one that had minimal coil whine. Back in 2009 few people said much about coil whine. It wasn't a commonly-used term, and it took me a while to even find the correct name for it; in the article I referred to it as a buzz or high-pitched squeal, and could only link to a few videos and discussions that backed up my claims. Those of you who've experienced it know just how annoying it can be. There's no easy fix: it's partly luck, and partly a case of checking reviews and user feedback to see which brands are more often afflicted with it on the latest generation of cards.

When I received this GTX 970 and discovered it had no noticeable coil whine, I was thrilled. I also knew that this model has a fan that switches off completely when the GPU is less than 60C. This means that during normal usage, this card literally makes no discernible noise. Perfect! Well not quite - when you get a PC down to the point where it's genuinely almost dead silent, you discover little things you've never noticed before. Enter my ten year old Samsung monitor, ready to fill the silence with a noise all of its own.

Interlude: Listen to your TV

Here's a great reason why CCFL backlights are no longer used in most monitors: they suck. They use a lot more electricity than LEDs, they have lower color accuracy, and as I discovered after I put together and fired up my new system, they can make a low-level buzzing noise. I'd never heard this noise on my system, and initially, I could swear it was coming from the back of my PC, so I assumed it was either the new PSU, or some component on the new motherboard. The mystery was quickly resolved however when I would walk into the room after an extended length of time away from my PC and find it dead quiet - but the second I moved my mouse and woke the screen up, the buzzing would restart.

A quick bit of research turned up this popular article, which identifies the cause of the problem as our old friend coil whine, this time likely emanating from the cheap inverters that Samsung used on this monitor to control the backlight. I did as the article suggested and raised the panel's brightness. Sure enough, when I got to 100% brightness, the buzzing immediately stopped. If I go below 98% or so it quickly jumps back up to full volume. Normally, I use 60% brightness and 40% contrast on this monitor, which gives it a clean but mellow look. The recommended solution of 100% brightness is, to my eyes, only slightly less uncomfortable than staring at the Sun. I tried to use the software image controls found in the Nvidia Control Panel to compensate, and did eventually find a level that (mostly) counteracted the high backlight brightness. Alas, running the panel at 100% brightness isn't viable. It increases the monitor's power usage substantially, in turn converting the screen into a radiator. I began sweating just reading news articles. Also, did I really want to run a 10 year old monitor at its peak power usage? Seems like a quick way of administering the coup de grâce to this panel.

Ultimately I returned to my previous settings, and as the weather has cooled down here, and quite possibly as my ears have become used to it, the buzz is much less noticeable. It's definitely there though. It's unlikely you'll have the same issue if your monitor is a newer LED, but the real moral of this story is that if you get your system silent enough, then you may find some interesting surprises awaiting you. It's rare to have completely quiet electronics these days.

Display Technology

Going into this build, I knew the monitor would be a difficult buy. The words "cheap" and "good quality" rarely occur in the same sentence when referring to displays of any kind. With any display, ranging from phones and tablets through to PC monitors and TVs, you can usually see where the money goes. Whether it's color accuracy, response time, size, resolution, black levels or refresh rate, skimping on a display can leave any one of these factors worse than you expected, and lead to buyer's remorse. Frankly, if you're looking to drop some decent coin on a monitor, now is not a good time to buy, because everything is in flux.

Oddly enough, it's not the choice of display technology that will cause problems. While displays for TVs are transitioning towards newer technologies like OLED (Organic Light Emitting Diode), that doesn't apply to PC monitors. OLED isn't readily available in dedicated PC monitor form, so unless you repurpose an OLED TV, it's not a consideration. On a side note, in terms of display technology, OLED is most definitely the best choice. With more natural color and motion, true blacks and thus an unbeatable infinite-level contrast ratio, faster response and no off-angle viewing issues, it's the display of choice for most applications in terms of image quality.

Screen burn-in (permanent image retention) is still a consideration though, especially for a PC monitor, which often displays bright static content for extended periods, such as the Windows Desktop or game HUDs. Yet, as with plasma, stories of the ease with which OLED can experience screen burn-in are greatly exaggerated. Real-life burn-in torture tests such as this one show that it takes almost an entire month of displaying bright static logos for 20 hours per day before even a hint of burn-in appears on an OLED. If you can afford one, an OLED screen is the technology of choice for your next TV, and even your next phone. But for monitor duties, we're not there yet.

That makes it a choice between LCD and LED. Except that LCD and LED monitors are essentially the same basic technology. The only difference is the light source behind the array of liquid crystals: it's LED (Light Emitting Diode)-based in the monitors bearing that name, whereas older LCD monitors, like my old Samsung, have a Cold Cathode Fluorescent Lamp (CCFL) backlight, which is rarely used these days. By a process of elimination, you now know that your choice is an LED monitor.

When selecting an LED, the key determinants are resolution, refresh rate, and panel type.

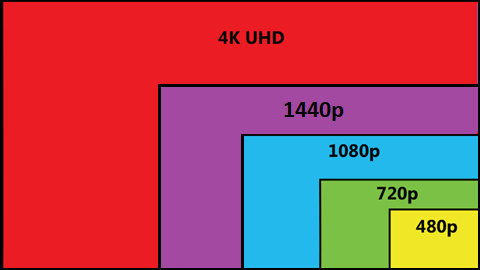

4K: The New Standard

I'm sure you already know that the standard resolution of displays is moving from 2K, which is 1,920 x 1,080 pixels, also known as 1080p or High Definition (HD), to 4K, which is 3,840 x 2,160 pixels, also called 2160p or Ultra High Definition (UHD). People mistakenly think that 4K is twice the resolution of 2K, but the 'K' is just shorthand for the approximate number of horizontal pixels; 2K images are 1,920 pixels wide, while 4K images are 3,840 pixels wide. Since both the height and the width of the image have doubled, 4K content contains four times the total number of pixel samples of 2K content. If you can play a game at reasonable framerates at 1920x1080 resolution, then switching to a 4K monitor and using its native resolution means your GPU has to push four times the number of pixels while trying to maintain playable framerates, a big ask for any current GPU.
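The arithmetic behind "double the width, four times the pixels" is easy to check. The snippet below is just a quick sketch using the two resolutions quoted above; the function and variable names are my own, chosen for illustration.

```python
def pixel_count(width: int, height: int) -> int:
    """Total number of pixels in a single frame."""
    return width * height


FULL_HD = (1920, 1080)  # "2K" / 1080p as the term is used in this article
UHD_4K = (3840, 2160)   # 4K / 2160p

hd_pixels = pixel_count(*FULL_HD)
uhd_pixels = pixel_count(*UHD_4K)

# The 'K' only describes the width, so the width ratio is 2...
width_ratio = UHD_4K[0] / FULL_HD[0]
# ...but the GPU has to fill every pixel, so the workload ratio is 4.
pixel_ratio = uhd_pixels / hd_pixels

print(f"1080p: {hd_pixels:,} pixels")   # 1080p: 2,073,600 pixels
print(f"4K:    {uhd_pixels:,} pixels")  # 4K:    8,294,400 pixels
print(f"Width ratio {width_ratio:.0f}x, pixel ratio {pixel_ratio:.0f}x")
```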

A great way to get a fairly accurate idea of the kind of performance you'd get on a new 4K monitor with your current system is to use Nvidia DSR or AMD VSR. These features allow you to raise the internal resolution at which the graphics card renders frames up to full 4K, and then automatically downscale the result to your monitor's native resolution so the image can be displayed. Because the GPU is rendering at full 4K resolution, DSR/VSR provides an almost perfect simulation of how your games will perform on a monitor with that native resolution.
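If you want to work out which supersampling setting corresponds to 4K on your own monitor, here's a rough sketch. It assumes the factor is a multiplier on the total pixel count (my understanding is that this is how DSR factors such as 4.00x are labeled, but treat that as an assumption); the function names are hypothetical and have nothing to do with any driver API.

```python
import math


def pixel_factor(native: tuple[int, int], target: tuple[int, int]) -> float:
    """How many times more pixels the target resolution has than native."""
    return (target[0] * target[1]) / (native[0] * native[1])


def render_resolution(native_w: int, native_h: int, factor: float) -> tuple[int, int]:
    """Internal render resolution for a given pixel-count factor.

    Assumption: the factor applies to total pixels, so each axis is
    scaled by the square root of the factor.
    """
    axis_scale = math.sqrt(factor)
    return round(native_w * axis_scale), round(native_h * axis_scale)


# A 1080p monitor needs a 4x pixel factor to render at full 4K.
print(pixel_factor((1920, 1080), (3840, 2160)))  # 4.0
print(render_resolution(1920, 1080, 4.0))        # (3840, 2160)

# My 1920x1200 panel at the same factor renders slightly taller than 4K.
print(render_resolution(1920, 1200, 4.0))        # (3840, 2400)
```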

Given the high cost and potentially painful performance drop when going to full 4K, many gamers have opted for a hybrid display resolution known as 1440p (typically 2,560 x 1,440 pixels), also referred to as QHD (Quad High Definition). This is a reasonable option if you must get a new monitor, but it's not ideal, because 4K (2160p) is definitely the new standard, and 1440p is a non-standard resolution. I know this scenario well, because my 24" Samsung monitor has a 1920x1200 (1200p) resolution, a non-standard PC resolution originally designed to provide additional height to improve reading and editing text content. However, aside from slightly reducing my performance in games, this hasn't caused any significant problems for me, because standard 2K 1080p content, such as movies, can be displayed on my monitor at its native size without being rescaled - all that happens is that two thin black bars appear above and below the image.
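To put a number on those "thin black bars", here's a small sketch that centers unscaled content on a larger screen. The `letterbox` helper is a made-up name for illustration, and it assumes the content is shown 1:1 with no rescaling, as described above.

```python
def letterbox(screen: tuple[int, int], content: tuple[int, int]) -> tuple[int, int]:
    """Return (side_bar_px, top_bottom_bar_px) for content centered at 1:1.

    Raises ValueError if the content does not fit without scaling.
    """
    screen_w, screen_h = screen
    content_w, content_h = content
    if content_w > screen_w or content_h > screen_h:
        raise ValueError("content is larger than the screen; it would need downscaling")
    side_bar = (screen_w - content_w) // 2
    top_bottom_bar = (screen_h - content_h) // 2
    return side_bar, top_bottom_bar


# 1080p video on a 1920x1200 panel: no side bars, 60-pixel bars top and bottom.
print(letterbox((1920, 1200), (1920, 1080)))  # (0, 60)
```

The same helper raises an error for 4K content on a 1440p panel, which is the situation where rescaling becomes unavoidable.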

But that's not the case with 1440p and 4K content; 4K (3840x2160) content doesn't fit into a 1440p monitor's native resolution of 2560x1440, so fixed-resolution content such as movies or TV has to be downscaled, meaning you're not watching native 4K. Games aren't a problem because the popularity of 1440p ensures that they support this resolution. A benefit of 1440p, besides better performance than full 4K, is that on smaller screens, the interface looks larger at native resolution. A 28" 2560x1440 monitor, for example, will have easier-to-view text and images than a 3840x2160 monitor of the same size. Of course, Windows allows you to rescale the interface independently of the resolution, but this provides mixed results.
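The "interface looks larger" point comes down to pixel density. The sketch below uses the standard pixels-per-inch formula (diagonal pixel count divided by diagonal size) on the 28" example above; the function name is my own.

```python
import math


def pixels_per_inch(width_px: int, height_px: int, diagonal_in: float) -> float:
    """Pixel density from resolution and diagonal screen size in inches."""
    return math.hypot(width_px, height_px) / diagonal_in


qhd_ppi = pixels_per_inch(2560, 1440, 28)
uhd_ppi = pixels_per_inch(3840, 2160, 28)

print(f'28" 1440p: {qhd_ppi:.1f} PPI')  # about 104.9
print(f'28" 4K:    {uhd_ppi:.1f} PPI')  # about 157.4

# At native resolution and 100% scaling, an element a fixed number of
# pixels wide appears this many times larger on the 1440p panel:
print(f"Relative size: {uhd_ppi / qhd_ppi:.2f}x")  # 1.50x
```

That 1.5 ratio is also the factor by which 4K video has to be shrunk to fit a 1440p panel. It isn't a whole number, so source pixels can't map neatly onto screen pixels.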

The resolution dilemma is a real one, and there's no simple solution. For the most part, 1080p is out unless either you're using a 1080p monitor you already own, or are only after a dirt cheap new monitor; 1440p is likely the best compromise if you're on a tight budget and especially if you're a gamer, but it's not true 4K and is not a sound long-term investment; a real 4K monitor is the best choice for longevity, but requires a hefty investment, even more if you're a gamer as you'll need a powerful GPU.

Interlude: Windows Scaling & Clarity

A frequent complaint by Windows 10 users is the way text and even images can seem quite blurry. This can occur in a range of scenarios, most commonly when using a high resolution (4K) monitor, or more than one monitor. Microsoft is aware of these problems, and provides an explanation in this article, along with a range of solutions in this article. There's no simple fix for this issue, as it depends on a number of factors. Here are some things you can check to try to improve image quality on your screen. The main scaling options in Windows 10 can be found under Settings>System>Display, or by right-clicking on the Desktop and selecting Display settings, so let's start there.

Make sure your Windows display resolution is always set to your monitor's native (max) resolution. For example, my monitor is 1920x1200 native, so the Resolution box should show 1920x1200 as well, which it does. Any Windows or game resolution other than your native one will automatically result in some blurring, no matter what you do.

The Scaling box should be set to 100%. If this results in text and/or images that are too small for your eyes, you can increase it, but bear in mind that once again, any scaling will result in unavoidable blurring to some extent. Importantly, more recent versions of Windows 10 have added the ability to set scaling on a per-program basis, which means that if you only want the text or images in specific program(s) to change, you can control these by right-clicking on the icon for that program, selecting Properties, and under the Compatibility tab, clicking the 'Change high DPI settings' button.

In your graphics card's control panel, you should ensure that there is no additional scaling being applied. For Nvidia users for example, open the Nvidia Control Panel, and under the Adjust Desktop Size and Position section, select No Scaling; also set the Perform Scaling On setting to GPU, though obviously if there's no scaling, the setting does nothing. This ensures that the image isn't being automatically rescaled at any time by the graphics driver.

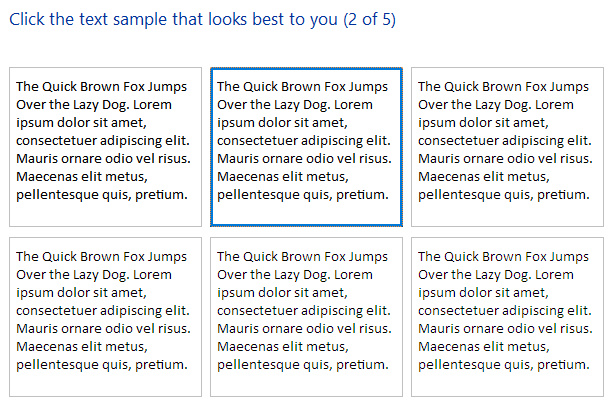

Most important of all, to optimize the clarity of text on your display, open Settings, type ClearType in the Search box and select Adjust ClearType text, or press WIN+R, type cttune.exe and press Enter. Make sure the Turn on ClearType box is ticked, then click Next and complete the tuner to see if you can reach a satisfactory text appearance for your needs. ClearType is an anti-aliasing method (a way of reducing jaggedness) that can make a substantial difference to the appearance of text on LCD-based screens. Note that it's the same as the 'Smooth Edges of Screen Fonts' option found under Control Panel>System>Advanced System Settings>Performance Settings.

Lastly, back under Settings>System>Display, click the Advanced Scaling Settings link, and you can experiment with the 'Let Windows Try to Fix Apps' option, though I recommend disabling it because, once again, any (re)scaling that is applied to an image always degrades it in some way.

The information above should make it clear to you that you need to spend some time determining the optimal mix of native resolution and screen size for your needs. A 4K monitor that's too small (e.g. a 24" or smaller 3840x2160 display) can result in uncomfortably tiny text at native resolution.
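One way to think about the Scaling setting discussed above is in terms of the workspace it leaves you with. The sketch below is a simplified model, assuming interface elements are simply enlarged by the chosen percentage, so the effective workspace is the native resolution divided by that factor. The function name is hypothetical, and real applications don't all respond to scaling the same way.

```python
def effective_workspace(native_w: int, native_h: int, scale_percent: int) -> tuple[int, int]:
    """Approximate desktop workspace, in 100%-scale units, at a given scaling."""
    factor = scale_percent / 100
    return round(native_w / factor), round(native_h / factor)


# A 4K panel at common scaling percentages.
for percent in (100, 150, 200):
    width, height = effective_workspace(3840, 2160, percent)
    print(f"{percent}%: behaves like a {width}x{height} desktop")
# 100%: behaves like a 3840x2160 desktop
# 150%: behaves like a 2560x1440 desktop
# 200%: behaves like a 1920x1080 desktop
```

So a small 4K monitor run at 200% gives you roughly the same amount of room as a 1080p screen, just drawn with more pixels.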

Panel Type

The panel type refers to the way in which the liquid crystal array that filters light from the LED backlight behind it is arranged:

TN: Twisted Nematic (TN) panels are the type used in most cheap LCD/LEDs, especially older ones. They provide high refresh rates for gamers and lower prices, at the expense of often noticeable differences in light output as you view them from any angle except dead center, along with greyish blacks and less vibrant colors.

VA: Vertical Alignment (VA) panels allow for much better viewing angles, improved colors and better contrast than TN-based displays, but they typically don't have the refresh rates needed for gaming.

IPS: This is generally the best panel type for a PC monitor, especially if you game. IPS panels can provide sufficiently high refresh rates, as well as good viewing angles, more accurate colors and good contrast, to suit every need.

Refresh Rates

The refresh rate on a display is the number of times per second that the monitor can redraw the entire screen, measured in Hz. For example, a 144Hz display can refresh its screen 144 times each second. Refresh rate is not the same as framerate/FPS, which is the number of times per second your graphics card can produce a new whole frame of graphics data to send to the display. But they do have a complex relationship with each other, and I've covered this on this page and this page of two guides I wrote a while back.
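A refresh rate is easier to reason about as a time budget: the number of milliseconds between redraws is just 1000 divided by the rate in Hz. A minimal sketch (the function name is mine):

```python
def refresh_interval_ms(refresh_hz: float) -> float:
    """Time between screen redraws, in milliseconds."""
    if refresh_hz <= 0:
        raise ValueError("refresh rate must be positive")
    return 1000.0 / refresh_hz


for hz in (60, 144):
    print(f"{hz}Hz -> a new refresh every {refresh_interval_ms(hz):.2f} ms")
# 60Hz -> a new refresh every 16.67 ms
# 144Hz -> a new refresh every 6.94 ms
```

The same formula applied to framerate gives the time your GPU has to finish each frame, which is why the two numbers interact even though they measure different things.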

Whenever your monitor and GPU are not perfectly synchronized, a harmless but annoying anomaly known as tearing can occur. In the past, a software fix known as Vertical Synchronization (VSync) was the main method used to prevent tearing, but VSync can cause issues of its own because it makes the GPU a slave to the monitor in order to synchronize the two, and in doing so, can reduce your overall performance and cause input lag.
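The performance cost of VSync can be sketched with a simplified model. It assumes classic double-buffered VSync and a GPU that takes the same time to render every frame; with triple buffering or other variations the numbers will differ, so treat this as an illustration rather than a description of any particular driver. In this model, a finished frame has to wait for the next refresh, so the displayed framerate snaps down to the refresh rate divided by a whole number.

```python
import math


def vsync_fps(gpu_fps: float, refresh_hz: float) -> float:
    """Displayed framerate under the simplified double-buffered VSync model.

    gpu_fps is what the GPU could manage with VSync off (hypothetical input).
    """
    if gpu_fps <= 0 or refresh_hz <= 0:
        raise ValueError("rates must be positive")
    # Each frame occupies a whole number of refresh intervals.
    refreshes_per_frame = math.ceil(refresh_hz / gpu_fps)
    return refresh_hz / refreshes_per_frame


print(vsync_fps(100, 60))  # 60.0 - GPU is faster than the monitor, capped at refresh
print(vsync_fps(50, 60))   # 30.0 - just missing 60 costs half the framerate
print(vsync_fps(25, 60))   # 20.0
```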

For both gaming and viewing movies on a display, the higher the refresh rate, the better; more so for gaming. However, the higher the refresh rate, the bigger the potential for a drop in performance if your GPU can't consistently match the monitor's high refresh rate.

In recent years, both Nvidia and AMD have come up with proprietary hardware-based solutions to this problem, known as GSync and FreeSync respectively. These make the monitor a slave to the GPU, removing tearing without the need for VSync. But to utilize this solution, you need to buy a monitor with the relevant technology for your GPU - an Nvidia GTX owner for example needs to buy an Nvidia GSync-capable monitor. Most recently, Nvidia issued drivers that provide FreeSync support, allowing their GPUs to also tap into the Adaptive Sync capabilities of AMD's FreeSync monitors. So now, whether you're an Nvidia or AMD user, an Adaptive Sync capable monitor is fine. While it's not essential, GSync/Adaptive Sync/FreeSync is highly desirable for gamers, especially if you want to future proof your monitor.

Monitor Choices

I spent some time looking around for the types of monitors that were available, in terms of the various combinations of the features listed above, at what sizes, and critically, at what prices. The following are my notes from that exercise, purely to show you the options I found and what I discovered about them; the selection of monitors and prices in your area may very well differ, and more importantly, so will your personal budget and priorities.

Initially, I put the ASUS VP28UQG on my list because a true 4K (3840x2160) display at 28" size seems reasonable, it supports Adaptive Sync, and the price is quite low. The main downsides are that it's 60Hz and TN. Then I stopped and remembered that I don't game anymore, so it's rather silly of me to buy a relatively inferior TN-based display, when I should be aiming for better contrast and color accuracy, so ideally an IPS panel. I looked around for some more true 4K displays to consider:

Again, this list is specific to my needs, which puts greater emphasis on future-proofing for 4K content and better colors and contrast, over higher refresh rates. I was foolishly under the impression that monitor prices might have come down, but they haven't. Even settling for a 4K display based on 60Hz TN technology would set me back quite a bit, for little gain over what I already have: a 2K 60Hz TN display. My decision came down to keeping the existing 24" Samsung I'd been using these last 10 years, or spending upwards of 50% more than what I'd already decided to drop on the rest of my system, just for a new monitor. I chose to keep my existing monitor. It's working perfectly well (a little CCFL buzzing aside), looks brand new, and although it's hardly spectacular, I've been happy with the image quality so far. Plus if it does die, it won't harm anything else, so I can let it run to failure while I wait for 4K monitors to improve in price and features.

If you don't have the luxury of just opting out of buying a new monitor like I have, then in a pinch, I would recommend buying a cheap 1440p (QHD) TN monitor to tide you over for the next couple of years - save even more by choosing a 60Hz model if you don't game. That shouldn't cost you very much, and will serve well until cheaper options come out, or you find the money for a better display. This article gives you an idea of the price range for the gaming monitors currently available, and at the time of this writing, a top quality no-compromises 4K IPS gaming monitor can set you back upwards of $1,700US, and that's just for a 27" size, whereas a decent 1440p TN monitor in the same size can be had for around $360US.

8K is Coming

Just as many of us are getting our heads around the cost of transitioning to the 4K standard, along comes the news that 8K displays are here, and will soon be ubiquitous. 8K is 7,680 x 4,320 pixels, or 4320p. Technically, it's true to say that because our eyes don't perceive information in terms of pixel samples, the higher the resolution, the closer it gets to what we actually see in the real world. But in practical terms, I can't argue with anyone who thinks that even 1080p is good enough for most needs, much less 4K, so a jump to 8K seems ridiculous right now. It's not the average consumer who's driving the demand for these products, and even among enthusiasts, 8K is hardly a must-have. What's driving it is that manufacturers need to generate sufficient change as frequently as possible to drive sales of their hardware.

Setting aside the fact that we've only just reached the point where most entertainment content, such as movies and TV shows, is at 2K resolution at reasonable bitrates on popular services like Netflix, and some decent content is available at 4K resolution, 8K as a standard for PC gaming in particular is quite simply insane. There are few GPUs that can achieve smooth framerates for 4K gaming right now. 8K can quadruple the load of 4K, which means it'll probably be another 3-5 years before true 8K capable GPUs start to emerge, and lord knows at what prices.
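To see how steeply the load climbs, here's a rough sketch comparing how many pixels per second a GPU would need to deliver at each resolution covered in this article. The 60 FPS target is just an assumed example, and raw pixel count is only a proxy for GPU load; real game performance doesn't scale perfectly with it.

```python
RESOLUTIONS = {
    "1080p": (1920, 1080),
    "1440p": (2560, 1440),
    "4K": (3840, 2160),
    "8K": (7680, 4320),
}

TARGET_FPS = 60  # assumed framerate target, purely for illustration


def pixels_per_second(width: int, height: int, fps: int) -> int:
    """Raw number of pixels that must be produced every second."""
    return width * height * fps


baseline = pixels_per_second(*RESOLUTIONS["1080p"], TARGET_FPS)
relative_load = {
    name: pixels_per_second(w, h, TARGET_FPS) / baseline
    for name, (w, h) in RESOLUTIONS.items()
}

for name, load in relative_load.items():
    w, h = RESOLUTIONS[name]
    rate = pixels_per_second(w, h, TARGET_FPS)
    print(f"{name:>5}: {rate:>13,} pixels/s ({load:.2f}x 1080p)")
```

By this measure 8K is four times the work of 4K and sixteen times the work of 1080p.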

The next section covers the most glamorous aspects of any new system: the input peripherals, audio and optical drive.