Hardware Confusion 2009

[Page 6] My Choices (Pt.3)

RAM

My Choice: 6GB (3 x 2GB) G.Skill 1333MHz PC3-10666 CL9 DDR3

While G.Skill is generally recognized as a good brand of RAM, and, as I noted under the Motherboard section, is also certified by ASUS to run without problems on my P6T Deluxe motherboard, some people may wonder why exactly I bought what appears to be such an average-performing RAM package. DDR3 RAM that's rated for 1333MHz and runs at 9-9-9-24 default timings is certainly not exceptionally fast. Several factors made me settle for this RAM, not least availability and price. The 1333MHz speed rating was a deliberate choice because, as I've noted under the CPU section, I knew for certain I wouldn't be overclocking my system. A stock Core i7 920 system runs DDR3 RAM at 1066MHz, so RAM rated at 1333MHz more than meets the stock requirements. However, the somewhat relaxed default timings (9-9-9-24) might be a cause for concern, especially considering there are DDR3 modules which can do 7-7-7-20 timings by default at 1333MHz. Did I make a major performance blunder with my choice of RAM?

This is a question only research can answer. As this Core i7 Memory Performance article and this G.Skill DDR3 Review reveal, three DDR3 modules in Triple Channel mode can provide a potentially huge amount of memory bandwidth: up to 27GB/s at 1600MHz. However, even at the default speed of 1066MHz there's 20GB/s on tap should the system need it. As you read through the benchmarks in these articles, you'll quickly see that in real-world applications and games, the performance difference between stock 1066MHz and overclocked 1600MHz DDR3 on a Core i7 platform is not significant. In particular, the difference between 1333MHz and 1600MHz is negligible, especially for gaming; only synthetic benchmarks show a marked difference. Memory latency differences prove slightly more important, but not to a major degree: the practical difference between 9-9-9-20 and 7-7-7-20 timings, for example, is less than 1 FPS in games like Far Cry 2 running at 1920x1200. Finally, even the choice of Single, Dual or Triple Channel mode did not seem to have a major impact on performance; once again, only synthetic benchmarks showed a real difference.
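For context, the bandwidth figures above are measured results from those articles. The theoretical ceiling can be worked out from the number of channels, the width of each channel and the transfer rate. The sketch below assumes the standard 64-bit (8-byte) DDR3 channel and treats the quoted "MHz" speeds as effective transfer rates in MT/s; it only illustrates the arithmetic and is not a benchmark, since real-world results land below these peaks.

```python
# Theoretical peak memory bandwidth = channels x channel width (bytes) x transfer rate.
# Assumptions: each DDR3 channel is 64 bits (8 bytes) wide, and the quoted
# "MHz" speeds (1066, 1333, 1600) are effective transfer rates in MT/s.

BYTES_PER_TRANSFER = 64 // 8  # one 64-bit channel moves 8 bytes per transfer


def peak_bandwidth_gbs(channels: int, transfer_rate_mts: int) -> float:
    """Theoretical peak bandwidth in GB/s (decimal: 1 GB = 10**9 bytes)."""
    bytes_per_second = channels * BYTES_PER_TRANSFER * transfer_rate_mts * 1_000_000
    return bytes_per_second / 1e9


if __name__ == "__main__":
    for rate in (1066, 1333, 1600):
        for channels in (1, 2, 3):
            gbs = peak_bandwidth_gbs(channels, rate)
            print(f"DDR3-{rate}, {channels} channel(s): {gbs:.1f} GB/s")
```

This gives roughly 25.6GB/s for Triple Channel at 1066MHz and 38.4GB/s at 1600MHz, so the measured 20GB/s and 27GB/s figures sit somewhat below the theoretical maximum, as you would expect.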

This demonstrates that choosing 1600MHz memory is not necessarily a wise choice. The extra bandwidth is not going to make any significant difference to performance on a Core i7-based system, and 1600MHz memory can often cost double the price of 1333MHz RAM. In my opinion, unless you're an extreme overclocker, it seems much wiser to spend the extra money on lower latency and/or more RAM. While 3GB (3 x 1GB sticks running in Triple Channel) is fine for current needs, I would recommend 6GB of RAM or more for better future-proofing, and to avoid winding up with a 'mix and match' RAM setup if you choose to upgrade later; identical sticks of RAM manufactured at roughly the same time are always best for ensuring optimal stability. The money you save by buying 1333MHz or even 1066MHz RAM rather than 1600MHz modules should allow you to double your RAM quantity, and more RAM will provide a far greater improvement in terms of smoother gaming and desktop use when combined with a 64-bit operating system, as I demonstrate later.

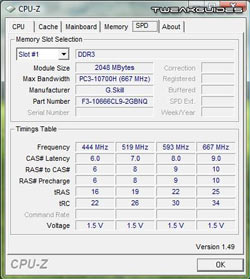

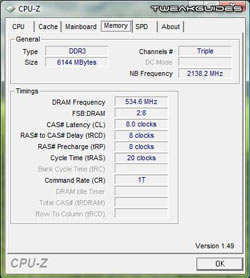

Latency is the issue that still worried me somewhat, because although the gains are extremely small, I would still have preferred 7-7-7-20 (CL7) RAM to 9-9-9-24 (CL9) RAM. This is where availability and price came into play: I simply couldn't find a good 6GB package of CL7 RAM at a reasonable price, and I wasn't prepared to pay a lot more money just for a small drop in latency, so ultimately I settled for the CL9 modules. However, it turns out that all is not lost. As CPU-Z shows below, because my RAM modules are rated for CL9 only at their full 1333MHz speed, they have tighter default timings at the stock Core i7 speed of 1066MHz. The SPD table on my RAM is shown below (left), and at the stock speed of 1066MHz (2 x 533MHz, because it's DDR) the RAM is actually running 8-8-8-20 (right):

As the benchmarks and reviews show, 8-8-8-20 is pretty much identical to 7-7-7-20 in real-world performance, which is good enough for me. Even though it seems logical in hindsight, I can't claim that I knew in advance that this RAM would run tighter timings at stock speeds; I didn't research it quite that thoroughly, but fortunately things have worked out just fine.
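Because timings are counted in clock cycles, the same module can get away with fewer cycles at a lower clock speed, since each cycle lasts longer. A rough way to put timings at different speeds on a common scale is to convert CAS latency into nanoseconds. This is a simplified sketch that looks only at the first timing figure (CL) and ignores the others; the 533MHz clock for 1066MHz RAM follows from the DDR doubling mentioned above.

```python
# Convert a CAS latency (counted in clock cycles) into nanoseconds.
# DDR memory transfers data twice per clock, so the clock is half the quoted
# speed: "1066MHz" DDR3 runs a ~533MHz clock, as CPU-Z reports.
# Simplification: only CAS latency (the first timing figure) is considered.


def cas_latency_ns(cas_cycles: int, transfer_rate_mts: float) -> float:
    """Return CAS latency in nanoseconds for a given DDR transfer rate."""
    clock_mhz = transfer_rate_mts / 2      # two transfers per clock cycle
    cycle_time_ns = 1000 / clock_mhz       # length of one clock cycle in ns
    return cas_cycles * cycle_time_ns


if __name__ == "__main__":
    for label, cl, rate in (
        ("CL9 @ 1333MHz", 9, 1333),
        ("CL8 @ 1066MHz", 8, 1066),
        ("CL7 @ 1333MHz", 7, 1333),
    ):
        print(f"{label}: {cas_latency_ns(cl, rate):.1f} ns")
```

Cycle counts on their own can't be compared directly across different speeds, which is why converting to nanoseconds helps. CAS latency is still only one part of overall memory performance, so real-world benchmarks like those cited above remain the better guide.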

Once again, the discussion above shows that there's no need to automatically assume that buying the fastest or most expensive product to give yourself "plenty of headroom" is necessarily a wise investment. Do some research to see whether all that extra speed and lower latency really make a difference in practice. The money you save from buying slightly slower-rated RAM can, for example, allow you to buy more GB of RAM, which in turn provides far greater benefits to any system.

Graphics

My Choice: Leadtek Nvidia GeForce GTX 285 1GB

I've been buying graphics cards for over ten years, and in that time I've used several different models: TNT2, Viper II, GeForce 2, GeForce 3, Radeon 9700 Pro, Radeon 9800 Pro, GeForce FX5600 (donated), GeForce 7800GTX, and GeForce 8800GTS. This is the first time I've found the decision of which graphics card to buy moderately vexing, at least at the upper end of the market. The reason is that for the first time ever, instead of releasing a single-GPU solution which clearly dominates the charts, both ATI and Nvidia have released dual-GPU graphics cards as their highest offerings. And of course, you can also combine several graphics cards in SLI or CrossFire mode for improved performance. Which configuration is the best value for money? Which will best meet my needs and priorities?

Both the ATI Radeon HD4870 X2 and the Nvidia GeForce GTX 295, although they put two GPUs on a single card, still rely on CrossFire and SLI technology respectively to work, and in some ways this actually made my choice much easier. You see, I still don't put a great deal of stock in either CrossFire or SLI. Remember that my number one priority in building this system is stability and, as a corollary, hassle-free functionality. CrossFire and SLI cannot yet be called 'hassle free' by any stretch of the imagination, because whenever a game or the drivers don't support CrossFire or SLI properly, performance will not necessarily scale well enough to fully utilize both GPUs. Furthermore, both SLI and CrossFire bring various quirks with them, including strange framerate drops and the very undesirable 'microstuttering', which is explained in more detail in this article.

Another important concern for me, at least based on the priorities I outlined earlier, is the heat and noise impact of multiple-GPU configurations (whether as separate cards or on a single card). The GPU is already one of the hottest components in a case, and adding a second GPU which can sometimes sit fully or partially idle but still pump out additional heat doesn't seem like a particularly elegant way of getting extra performance in my opinion. The extra heat radiated inside the case requires higher fan speeds from other components to compensate, which in turn means more noise. On top of that, the multi-GPU cards themselves can create quite a racket: dual-GPU cards like the GTX 295 and HD4870 X2 are significantly louder than single-GPU cards. For example, drawing noise figures in decibels (dB) from this review and this review, we see that a single-GPU GTX 285 comes in at 38.8 dB at idle and reaches 44.8 dB under load. The GTX 295, on the other hand, starts at 44 dB at idle and ramps up to a very loud 53.1 dB under load; similarly, the HD4870 X2 starts at 45 dB at idle and hits around 51 dB under load. To give you an indication of what that can sound like, check this YouTube video to hear an HD4870 X2 fan ramping up to full speed by the end of the video. Regardless of how well it performs, it's noticeably loud even at lower speeds, and at full speed it's just ridiculously loud in my opinion.
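Because decibels are a logarithmic scale, the gaps between those figures are bigger than they look. The sketch below compares the load figures quoted above using the standard relationship that +10 dB means ten times the sound intensity, plus a common rule of thumb (an approximation, not an exact law) that perceived loudness roughly doubles for every +10 dB. Keep in mind that the figures come from two different reviews, so their measurement setups may differ.

```python
# Compare two sound levels quoted in decibels (dB).
# dB is logarithmic: +10 dB means 10x the sound intensity.
# Perceived loudness uses a common rule of thumb (about 2x per +10 dB),
# which is an approximation rather than an exact law.


def intensity_ratio(db_a: float, db_b: float) -> float:
    """How many times more sound intensity level a represents than level b."""
    return 10 ** ((db_a - db_b) / 10)


def perceived_loudness_ratio(db_a: float, db_b: float) -> float:
    """Rough perceived loudness of level a relative to level b."""
    return 2 ** ((db_a - db_b) / 10)


if __name__ == "__main__":
    gtx285_load_db = 44.8  # single-GPU GTX 285 under load, from the reviews cited
    for name, load_db in (("GTX 295", 53.1), ("HD4870 X2", 51.0)):
        diff = load_db - gtx285_load_db
        print(
            f"{name} vs GTX 285 under load: +{diff:.1f} dB, "
            f"~{intensity_ratio(load_db, gtx285_load_db):.1f}x intensity, "
            f"~{perceived_loudness_ratio(load_db, gtx285_load_db):.1f}x as loud"
        )
```

By this rough measure, the GTX 295's extra 8.3 dB under load works out to nearly 7 times the sound intensity of the GTX 285, and something like 1.8 times the perceived loudness.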

So on paper a multi-GPU solution, whether a single card like the GTX 295 or HD4870 X2, or a pair of separate cards in SLI or CrossFire, might appear to be a great performance solution. In practice, however, there are driver quirks, heat issues and loud noise to contend with, not to mention the higher cost. For some people these concerns aren't particularly important, but to me they were, so I decided against a multi-GPU setup of any kind. This narrowed my options down to a single-GPU card: either the GeForce GTX 285, Nvidia's fastest single-GPU product, or the Radeon HD 4870, ATI's fastest single-GPU card. Looking at a range of reviews like this one, I quickly saw that although more expensive, the GTX 285 is also noticeably faster than any other single-GPU card at my gaming resolution of 1920x1200, particularly in the games I play and in games which I believe are indicative of the most commonly used game engines right now: Far Cry 2 (Dunia engine), Crysis Warhead (CryEngine 2), Mass Effect (Unreal Engine 3) and Left 4 Dead (Source Engine). I also noted that it would be several months (likely 4-6 months or more) before the next generation of high-end GPUs appears from ATI or Nvidia, which made my decision even easier.

There's also something else to consider. Some people may disagree with me, but I believe that upcoming PC games are not necessarily going to need as much GPU power as we might expect. This is because PC game development right now is tied very closely to the development of games for the current 'next-gen' consoles; I discuss the rationale behind console-centric games development in more detail in this section of my PC Game Piracy Examined article. I believe one of the reasons why 'multiplatform' games like Far Cry 2 and GTA IV are more CPU-dependent is that developers have had to leverage the existing multi-core CPU power of the consoles rather than their somewhat ageing GPUs, and this translates into more CPU-dependent PC games. The Xbox 360, for example, has an ATI Xenos graphics solution roughly equivalent to a cross between the X1900 and HD2900 series graphics cards from several years ago. At the same time, however, it has a powerful triple-core 3.2GHz IBM Xenon CPU. In today's terms, the Xbox 360's CPU is clearly the component which can provide greater potential performance, hence developers are putting the CPU to greater use in games until the next generation of consoles comes out around 2011-12.

Of course I could be wrong; there's no way anyone can know for sure what upcoming games will require. A worst-case scenario would be that PC gamers keep getting poor-quality console ports which are more stressful on both the CPU and GPU simply because they aren't properly optimized for the PC platform. Still, I suspect that for a range of reasons, including the 'consolization' or 'multiplatform' trend I note above, not to mention the push to capture more of the mainstream market, the end result will be less graphically strenuous games. Crytek's experience with Crysis tends to confirm this in my mind: in late 2007 they released a cutting-edge PC game which brings any PC to its knees at maximum settings even to this day, and as a result they did not have the same commercial success as less complex, mainstream-orientated games. Even PC gamers themselves criticized Crytek for releasing such an advanced game, despite the fact that Crysis scales incredibly well to suit low-end systems. As a result, it seems very unlikely that any other developer will follow in their footsteps any time soon. I suspect the days of games which push graphical boundaries the way PC games of yesteryear did are pretty much over, at least until the next generation of consoles arrives.

On balance, I decided on the Nvidia GeForce GTX 285. And let me say, it does an absolutely amazing job. I run games at 1920x1200, my native resolution (see the Monitor component for details), and not only can the GTX 285 run the latest games at maximum settings at that resolution, it has enough power to spare to allow very high Antialiasing and Anisotropic Filtering in most cases to boot. For example:

One thing which actually caught me by surprise is how perfectly smooth framerates are when gaming on this system. When I say smooth, I really do mean 100% uninterrupted smooth framerates from the moment the game loads up. This is because the system is not bottlenecked by any component at all. The CPU is never remotely under stress, the hard drive is very fast, and combined with a 64-bit OS, 6GB of RAM and a high-end graphics card with 1GB of onboard VRAM, there's absolutely no room for stuttering or loading pauses. I can't fully convey how stunning the games look on screen when maxed out, often with maximum AA/AF as well, while still playing so smoothly. It makes things so much more immersive. I provide a YouTube video which demonstrates this at the end of this article, but for now I will post some sample screenshots from Fallout 3, Crysis Warhead, Far Cry 2 and Team Fortress 2 (the only games I have installed right now) to try to show you how good these games can look at the settings noted above. Look closely and note that textures are clear and crisp, and that there are no jaggies thanks to the high AA; note also the FPS counter at the top right:

Update: Aside from the HD video at the end of this article, you can now also see HD videos of various recent games running at maximum possible settings on this system, including Far Cry 2, GTA IV and Brothers in Arms: Hell's Highway, demonstrating how smoothly they run even with the significant performance hit of the FRAPS video recording software.

Even gaming at these sorts of resolutions and settings, the GTX 285 goes from its idle temperature of 41-43C to a maximum of only around 76C on my system, and I'm happy to report that the fan barely rises above its very quiet idle noise even under full sustained load; it's the quietest system I've ever heard under full graphics load. This is one of the benefits of the GTX 285 over the earlier GTX 200 cards: the move to 55nm for this GPU has removed the need for a loud cooling solution. Best of all, the GTX 285 is unaffected by any crashes, quirks or other oddities in games because it's only a minor revision of a graphics architecture that's already been out for 6 months now (the GTX 200 series), and it is a single-GPU card not reliant on SLI technology. It's the only card which satisfactorily meets all of my key priorities: stability, noise, longevity and performance. The price is fairly high, but reasonable given that it costs less than its predecessor, the GTX 280, yet performs at least 10% better and is quieter to boot. Of course, if you already have a GTX 280 or even a GTX 260, upgrading to a GTX 285 is not really good value for money, but for someone with an older graphics card, I can highly recommend it.

For those wondering why I didn't choose an ATI card: although the 4870 was cheaper, it was also a fair bit slower in the major gaming engines, and thus failed the longevity test. But that's not the main reason I didn't go with ATI. I'll be blunt: I won't consider an ATI card right now quite simply because I have no faith at all in the ATI driver team. It has nothing to do with fanboyism; I honestly don't care whether my graphics card has an ATI or Nvidia logo on it. The simple fact is that ATI appears to be struggling right now because its parent company AMD has fared poorly against the dominant CPU maker Intel for over two years. First with Core 2, and now with Core i7, Intel has completely outpaced AMD and greatly affected AMD's profitability. AMD's decline in turn appears to have affected its ongoing investment in ATI, which has historically had a much lower market share than Nvidia anyway. The latest Steam Hardware Survey, for example, shows that this is still the case, with Nvidia cards at roughly 64.5% of the market, while ATI has only 27.1%. This market power has given Nvidia the dollars and the clout to ensure that many games are developed specifically with Nvidia cards in mind, reducing the number of glitches Nvidia users experience out of the box and potentially increasing performance on Nvidia cards. Importantly, it also allows Nvidia to plow more money into driver development, resulting in more frequent, more functional and less problematic drivers. This is particularly important for those running multi-GPU setups, because these configurations require proper driver support for optimal performance; without it, they perform similarly to a single-GPU setup at best, or suffer slowdowns, stuttering and other issues at worst.

Now I know that this is a point of contention, because people will claim that Nvidia has its fair share of driver problems. This is absolutely true: some Nvidia users do have driver problems. However, based on plenty of research and experience, I'm familiar with how to determine whether a problem is user-based, driver-based or game-based, and I definitely see a larger proportion of genuine problems with ATI drivers than with Nvidia drivers, especially for users of ATI's flagship card, the HD4870 X2. You don't need to take my word for it though, as professional reviewers have also noted the same thing. From this review posted recently on AnandTech:

In spite of the potential advantages offered by the Radeon 4870 X2, we have qualms about recommending it based on our experiences since October with the introduction of Core i7 and X58 and the multitude of software titles that were released. Driver support just isn't what it needs to be to really get behind an AMD single card dual-GPU solution right now.

Similarly, here's another review which is also quite recent:

Let me get one thing straight. I love ATI, and for the most part, I grew up on ATI cards. I loved seeing them strike back against NVIDIA last summer with their HD 4000 series... and who wouldn't? One decision that always bothered me though, was their needlessly bulky drivers, based on none other than .NET code. I'm not sure if the issues I ran into are related to that, but I'm sure it doesn't help. I'm assuming that I'm not the only one who's ever experienced these issues, because I've run into these on two completely different platforms with completely different GPU configurations. I'll leave NVIDIA's drivers out of this, but while they are not perfect either, I haven't experienced a major issue like this since at least the Vista launch. ATI really, really has to get the driver situation sorted out, because as I said, there's no way I'm the only frustrated user.

Here's yet another recent review which singles out ATI's drivers:

That brings us onto a greater point about AMD's driver strategy - while the one-driver-a-month strategy worked incredibly well when the company was making only single GPU graphics cards, it doesn't work so well when you throw dual-GPU graphics cards into the mix. Whenever a new game or graphics card comes out, an optimised profile needs to be created - with a one-driver-a-month policy, that can mean a leadtime of up to two months if the release comes at the wrong time in AMD's driver cycle like it did with the Radeon HD 4850 X2. On multi-GPU graphics cards that rely on driver profiles to deliver their maximum performance, we feel there is a need to move away from this rigid strategy because it's now doing more harm than good in our opinion.

To add to this, Nvidia also has some useful features in their drivers which ATI has not yet matched, including individual game profiles, and more recently, GPU-accelerated PhysX and CUDA support for things like physics and AI processing on the GPU. I can't put it any more succinctly than to say that good hardware is dependent on good drivers, and while I believe ATI definitely has some good hardware, I simply do not trust them to deliver good drivers on a regular basis to support the latest games properly the way I've come to expect from Nvidia. It's not about loving Nvidia or hating ATI, it's about my number one priority, which is stability and hassle-free operation. I've always had that with Nvidia drivers, so that is another major reason for choosing the GeForce GTX 285.

However, none of this absolves either Nvidia or ATI of one hardware issue which caused me some initial grief with this new system: an electronic buzzing/whining/squealing noise which can occur when the GPU is doing any sort of 3D rendering, whether in a window on the desktop or in a fullscreen 3D game. This issue has to do with voltage regulation, and is explained in detail in this article. My first encounter with this problem actually occurred in 2003 when I bought a Radeon 9700 Pro, which would emit a high-pitched squeal whenever I scrolled up or down a document or web page. It was so annoying that I quickly sold the card and bought a 9800 Pro, which fixed the problem and was also an improvement in every other respect. It wasn't until I recently purchased and installed a Palit GeForce GTX 285 that I was again subjected to a similar, much louder buzzing, this time only during 3D games.

It's difficult to describe the noise, so I refer you to this YouTube video which demonstrates it. On my system the noise only occurred in some instances. For example, it was perfectly silent in all desktop usage, but would buzz noticeably in the Vista Chess Titans game whenever the chess board spun by itself. Running the Rthdribl tech demo at any size or resolution would also result in a variable buzz/squeal. In most games it would be totally silent, yet in Crysis Warhead it would buzz, but only during the introductory movies and in the main game menu; it would then become completely silent during the actual game itself, even at maximum settings.

With the generous assistance of Arthur from MTech Computers, the retailer where I'd purchased the Palit card, I managed to get a replacement Palit GTX 285, but sadly it suffered from exactly the same issue, so I returned that too. My PSU is a high-quality unit, and at 700W with 56A on the +12V rail it is more than enough for the card and my system, as I demonstrated under the Power Supply section. The fact that the GPU would emit this noise even at very light load and at low temperatures also made it clear that the cause wasn't a lack of power, heat or the fan. Given that the GTX 285s are all based on the Nvidia reference design, I was in a bit of a dilemma as to which card to choose as a replacement. After some more research I eventually ordered a third card, this time a Leadtek GTX 285. After installing it, I noticed that the Leadtek card also exhibits some buzzing, but fortunately far, far less than the Palit card, and so far it can only be heard in the main menu of Crysis Warhead; in every other game or situation it is thankfully completely silent.

Unfortunately it appears that virtually anyone can suffer from this problem; it's been going on for several years, and it's not restricted to Nvidia cards. For example, see here where ATI HD4870 X2 users are complaining about the same issue. There are several possible solutions/workarounds to this problem:

What bothers me most about this issue is that virtually none of the many professional reviews I've read mention this type of problem. The only recent reference I've found to it was in this review on a lesser-known site, where the reviewer explicitly says regarding a Gainward GTX 285:

While the fan on the heatsink didn't cause too much noise, the power circuitry of the card let me down once again. There is a buzzing sound coming from the card even at the desktop and the noise only gets worse when the card is loaded. The buzzing seems to be a problem that has plagued several different cards in the near history. To be honest the buzzing is more annoying than the sound of a fan, but luckily some of the noise is filtered out when the setup is placed inside a case.

The reviewer also lists this buzzing under the Cons section at the end of his article, so kudos to him for his honesty. It's a shame that the larger and supposedly more reputable review sites have for the most part completely ignored this issue. It's impossible to imagine that, of all the test setups and review samples out there, no major reviewer has ever run into this problem and found it an annoyance. If they strive to measure fan noise in decibels down to one decimal place as part of their reviews, it seems ridiculous that they would omit any mention of a loud, variable buzzing noise from the GPU. This tends to confirm my suspicion that big review sites have to look after the needs of the manufacturers first and foremost, rather than those of their audiences. In any case, both the card manufacturers and reviewers need to address this situation. While the buzzing noise is hardly the end of the world, it's the equivalent of buying a luxury car and hearing a rattle in the dash: it's indicative of poor build quality and leaves a bad taste in the buyer's mouth.

It's ironic that I ran into this issue at a time when I've made a lack of noise a top priority for my rig. It's probably no coincidence either, as some people with louder systems probably don't even notice the buzzing if it's low enough to be masked by fan noise, for example. In some respects it demonstrates that no matter how many reviews you read, you can always read more to pick up on minor issues like this. Furthermore, some general Google searching and reading of user feedback is absolutely necessary to get an idea of the scope of such an issue. Ultimately, though, there is currently no easy solution to this problem. It appears to be a lottery as to which cards will buzz on which systems, and it can afflict virtually any high-end graphics card currently on offer.

Anyway, in my case I'm now very happy with this GTX 285 and the type of gaming it allows me to do. There's no reason to go to a multi-GPU setup if a single GPU can do the job well at your resolution and settings, and I expect to run this card for at least one to one and a half years without any need to turn down the settings.

On the next page we continue our look at the various components.